PCPlus: Take Care of the Pennies

published: Wed, 30-Apr-2008 | updated: Fri, 5-Aug-2016

(Back at the beginning of last year, I signed up to write a monthly column called Theory Workshop for PCPlus in England. Part of the sgreement was permission to republish the articles on my website once a reasonable length of time had passed. Well, I think a year is reasonable enough, so here's the first of those articles. It was originally published in issue 255 of PCPlus, dated May 2007. Note that with these reprints I may extend the original article with example code, but in general I won't be rewriting them. I'll also drop the strap and intro, since they're more specific for the mag and don't make much sense here.)

There's a tale, almost certainly apocryphal, about a programmer in the early days of computers who made a millions by adding a subroutine to his bank's system that gathered all the not-quite-pennies from rounding the results of calculations and passing them through to his special account. This month we'll look at a similar scenario that can fool even seasoned programmers where the floating point variables you use do not store monetary values very well and you'll find you can lose the odd penny.

Floating point. Floating point numbers use something akin to scientific notation. You get a mantissa and an exponent, with both parts being of fixed length. Using the familiar calculator notation, 1,000,000 would be stored as 1.0E6 and 0.001 as 1.0E-3 (in both examples, the mantissa is 1.0, and the exponents are 6 and -3 respectively). Although you can store a much wider range of numbers using this format than say a fixed point number, you only get a certain number of significant digits because the mantissa is of fixed length. For example, for a single variable with 24 bits for the mantissa you can only count on getting 7 significant digits. Digits after that are increasingly unreliable.

Personal computers contain a floating-point unit (FPU) as part of the main CPU. The FPU is optimized for doing calculations on floating point values: the normal addition, subtraction, multiplication, and division operations, as well as transcendental and trigonometric functions like square root, logarithms, raising to a power, sin, and cosine. The floating point values are stored in binary, not decimal, just like the integer types you know. And therein lies the problem.

The decimal arithmetic we're familiar with is positional: we fix the position of the decimal point and the digits to the left of it increment in powers of 10 the further away we get from that decimal point. So, for example, we understand 123 to be 3*100 + 2*101 + 1*102. Similarly the digits to the right of the decimal point increment in powers of 1/10 the further away we get from it, meaning that we understand 0.123 to be 1*(1/10)1 + 2*(1/10)2 + 3*(1/10)3.

When we move to the binary world of the FPU and the floating point variables in our preferred programming language, the base is 2, not 10. Digits to the left of the binary point increment in powers of 2 (and obviously there are only two possible digits: 0 and 1), and digits to the right increment in powers of ½. We're very used to this when we talk about integers (for example, we can calculate that 1101 in binary is 13 in decimal pretty quickly), but we're not so familiar with binary fractions. If we're given 0.1101 as a binary number, it takes us a little while to work out that this is equivalent to ½ + ¼ + 1/16, which is 13/16, or 0.8125. What is disconcerting though is that a lot of very familiar decimal fractions just don't have a finite expansion in binary.

Let's investigate. 0.5 decimal is the same as 0.1 binary. 0.25 decimal is 0.01 binary and 0.75 decimal is 0.11 binary. What about 0.1 decimal, say? What is its binary equivalent? The answer is 0.000110011... with the 0011 part recurring indefinitely.

To someone who hasn't thought about or seen this before, that's a mind-boggling result. Numbers that are so compact in decimal form, like 0.1 or 0.42, are infinitely long when written in binary, just as 1/3 or 1/7 are infinitely long when written in decimal. In fact, since we're talking about monetary amounts, just taking the range of 100 penny values from 0.00 to 0.99, there are only 4 which have finite binary equivalents. The other 96 are infinitely long recurring fractions in binary. (The finite four are 0.00, 0.25, 0.50, and 0.75. Can you see why?)

At this point you should be getting the gist of the problem. The FPU is not infinite is size, so at some point these infinitely long binary fractions get truncated to fit into the registers on the chip.

There are 2 main floating-point types that we can use, together with one internal type that the FPU uses in order to gain some precision. The two floating point types are called single and double, but some programming languages may use different names. The single type is 4 bytes long, with 23 bits reserved for the mantissa, 8 bits for the exponent, and a single bit for the sign. The double type is 8 bytes long, with the mantissa taking up 52 bits, the exponent 11, and again a single sign bit.

Implied bits. Although the mantissa in a variable of single type, for example, is physically 23 bits long, we can infer an extra bit to make it 24 bits long. How? Well, if we write a non-zero decimal number in scientific form, with the mantissa having a single non-zero digit to the left of the decimal (such as 3.1416E0 or 2.9979E8), we can see that the first digit can be anything from a 1 to a 9. If, however, we do the same in binary, the only non-zero digit that can occur before the binary point is 1. Since it can only be a 1, why not just assume it's there and not store it? That's exactly what the single and double types do: they have an implicit leading 1. (Note that the FPU actually assumes a mantissa nnn means 0.1nnn and not 1.nnn, but the argument still holds.)

Let's concentrate on the single type since the rounding errors will be more pronounced and it's easier to visualize what's going on with fewer bits. Although physically only 23 bits long, the mantissa for the single type actually has 24 bits to work with (see Implied bits) and, working it out on a piece of paper I found that the binary mantissa for 0.01 decimal (one penny) is 0.1010 0011 1101 0111 0000 1010

(It's hard to see the repeating part here because there aren't enough digits to spot the recurrence, but in fact the first 20 significant binary digits repeat indefinitely.)

This value is just less than the correct one since we've had to truncate an infinity of other bits. In fact, when this is converted back to decimal and taking into account the exponent (which I haven't shown), we get 0.0099999998 to 8 significant digits. That's pretty close to 0.01, but I'm sure you can see that if you add a lot of single pennies together, this slight error grows and grows.

The problem gets magnified, though, when we talk about and use rounding.

Back in our schooldays, we learned a fairly simple technique for rounding numbers. Look at the next digit from where you want to round; if it is 0 to 4, truncate, and if it is 5 to 9, add 1 to the previous digit and then truncate.

Unfortunately with our binary floating point numbers we no longer have a nice decimal representation whose digits we can look at, so we modify the rounding algorithm slightly. If we were rounding to 2 decimal places, say, we take the number, multiply it by 100 (which of course is 102, the 2 being the number of decimal places), add 0.5 (which converts exactly to a finite binary value, remember), truncate to a whole number, and then divide by 100 again. This is a pretty nice algorithm since we've managed to get rid of that decision (is the next digit less than 5 or not?).

Let's see how this works with 1.894. Multiply by 100 to give 189.4; add 0.5 to give 189.9, truncate to give 189.0; and then divide by 100 to give a final answer of 1.89, as we'd expect. Now let's try with 1.895: multiply by 100 to give 189.5; add 0.5 to give 190.0; truncate, which is still 190.0; divide by 100 to give 1.90.

Unfortunately, once we represent the numbers in binary we hit all kinds of problems, and generally right at the point where the next digit is a 5. The reason is that, in general, finite decimal values do not have finite binary values. They have to be truncated to fit. This means that the binary value is either just slightly more, or slightly less than the true decimal value.

Taking our example of 1.895, we find that when we store this value into a single variable we'll actually be storing 1.89499998 to 8 decimal places, which is a little less than the correct value. I'm sure you can see what happens when we apply our algorithm now: we'll get the result that 1.895 rounded to two decimal places is 1.89. This is of course wrong, and if this were a monetary value from a calculation, we've just lost a penny.

The solution to all these problems is to either use a proper decimal

type to store monetary values (in C#, for example, this is the

System.Decimal type), or to store values as a whole

number of pennies and format them properly for display purposes,

essentially by dividing by 100 when needed. This latter scheme is

known as scaling. Note that using the double type instead of the

single type is not going to help. The problems I've described are

still there for double.

So, unless you're planning on scooping up all those almost-halfpennies and putting them in your bank account, please beware of using floating point variables to hold monetary amounts, and if you do, at least scale them.

Spotlight on... Converting decimals to binary

For converting a fractional decimal to binary, we continually multiply the fractional part by 2, lopping off the integer parts when we get to them. We start off with "0." as our binary number and, at each stage, if the running total is less than 1, we add a 0 to this, otherwise, we add a 1 and then discard the integer part from our running total.

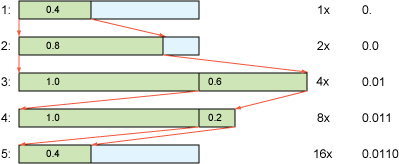

This can be shown geometrically by zooming in on the unit block. Let's convert 0.4 to binary.

- The start: the whole block is 1 unit long, and the number is 0.4 of that. Double the zoom.

- The block is now 1/2 unit long. No overflow. Double the zoom.

- The block is now 1/4 unit long, and we've overflowed. Therefore the original number is at least 1/4. Chop this off, and double the zoom on the remainder.

- The block is now 1/8 unit long, and we overflowed again. So the remainder was at least 1/8. Chop and zoom.

- The block is now 1/16 units long. No overflow. Notice though we're now going to repeat the pattern.

So 0.4 is 0.0110..., with the 0110 repeating indefinitely.